
Embedded models - MIDAS


MIDAS contains representations of human cognitive, perceptual, and motor operations in order to simulate control and supervisory behavior. Within their limits of accuracy, these models describe the responses that can be expected of human operators interacting with dynamic automated systems. The fundamental human performance elements of these representations can be applied to any human-machine environment. Tailoring them to the particular requirements of a given domain, largely in terms of the human operator's knowledge and rule base, is of course a necessary step when the model is moved between domains.

Each human operator modeled by MIDAS contains the following models and structures, whose interaction produces a stream of activities in response to mission requirements, equipment requirements, and models of human performance capabilities and limits.

Physical Representations: An anthropometric model of human figure dimensions and dynamics has been developed in conjunction with the Graphics Laboratory of the University of Pennsylvania. The model, called Jack, is an agent in the overall MIDAS system. The Jack agent represents human figure data (e.g., size and joint limits) as a mannequin that dynamically moves through various postures to represent the physical activities of a simulated human operator. The graphical representation of the Jack agent also helps designers address questions of cockpit geometry, reach accommodation, restraint, egress, and occlusion.
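Jack itself models full joint limits and postures, which the text does not detail. As a much cruder illustration of what a reach-accommodation question looks like, the sketch below replaces the mannequin with a spherical reach envelope centred on the shoulder. The `Operator` class, the coordinate frame, and all numeric values are hypothetical and are not part of Jack or MIDAS.

```python
import math
from dataclasses import dataclass

Point = tuple[float, float, float]  # hypothetical cockpit coordinate frame, in metres

@dataclass
class Operator:
    shoulder: Point   # shoulder joint position
    arm_reach: float  # functional reach from shoulder to fingertip, in metres

def within_reach(op: Operator, control: Point) -> bool:
    """True if the control lies inside a spherical reach envelope centred on the shoulder."""
    return math.dist(op.shoulder, control) <= op.arm_reach

def unreachable(op: Operator, controls: dict[str, Point]) -> list[str]:
    """Names of controls that fall outside the operator's reach envelope."""
    return [name for name, pos in controls.items() if not within_reach(op, pos)]
```

For example, with `small = Operator((0.0, 0.0, 0.0), 0.7)` and controls `{"gear_handle": (0.5, 0.3, 0.2), "overhead_switch": (0.6, 0.4, 0.3)}`, `unreachable(small, controls)` returns `['overhead_switch']`. A full anthropometric model would instead solve for a posture within joint limits.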

Perception and Attention: The simulated human operator is situated in an environment where data constantly streams into the operator's physical sensors. While auditory, haptic, and proprioceptive systems play an important role in perceiving information relevant to vehicle operators, MIDAS has so far focused on modeling visual perception. In brief, during each simulation cycle the perception agent computes which environment or cockpit objects are imaged on the operator's retina, tagging each one as in or out of the peripheral and foveal fields of view (90 and 5 degrees, respectively), in or out of the attention field of view (which varies with the task), and in or out of focus relative to the fixation plane.
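The per-cycle tagging described above can be sketched as a small geometric test. This is an illustrative sketch under several assumptions not stated in the text: the 90 and 5 degree figures are treated as full cone widths (so the test uses half-angles), the fixation plane is taken to be perpendicular to the line of sight, and `attention_fov_deg`, `fixation_distance`, and `focus_tolerance` are hypothetical parameters rather than MIDAS names.

```python
import math
from dataclasses import dataclass

Vec = tuple[float, float, float]

# Field widths from the text, interpreted here as full cone angles (an assumption).
PERIPHERAL_FOV_DEG = 90.0
FOVEAL_FOV_DEG = 5.0

@dataclass
class VisualTags:
    in_peripheral: bool
    in_foveal: bool
    in_attention: bool
    in_focus: bool

def _dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))

def tag_object(eye: Vec, gaze: Vec, obj: Vec, attention_fov_deg: float,
               fixation_distance: float, focus_tolerance: float) -> VisualTags:
    """Tag one object for one simulation cycle (hypothetical interface)."""
    to_obj = tuple(o - e for o, e in zip(obj, eye))
    gaze_len = math.sqrt(_dot(gaze, gaze))
    obj_len = math.sqrt(_dot(to_obj, to_obj))
    # Eccentricity: angle between the line of sight and the direction to the object.
    cos_ecc = max(-1.0, min(1.0, _dot(gaze, to_obj) / (gaze_len * obj_len)))
    ecc = math.degrees(math.acos(cos_ecc))
    # Distance along the line of sight, compared with the fixation plane's distance.
    depth = _dot(gaze, to_obj) / gaze_len
    return VisualTags(
        in_peripheral=ecc <= PERIPHERAL_FOV_DEG / 2,
        in_foveal=ecc <= FOVEAL_FOV_DEG / 2,
        in_attention=ecc <= attention_fov_deg / 2,
        in_focus=abs(depth - fixation_distance) <= focus_tolerance,
    )
```

An object about 26.6 degrees off the line of sight at the fixation distance is tagged as peripheral and in focus, but neither foveal nor (with a 20 degree attention field) attended.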

An environmental object can be in one of several states of perceptual attention. Objects in the peripheral visual fields are perceived, and attentionally salient changes in their state are passed to the updatable world representation. For detailed information to be fully perceived (e.g., reading a textual message), the data of interest must be in focus, attended, and within the foveal field of view for 200 ms.
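The 200 ms rule can be sketched as a per-object dwell timer updated every simulation cycle. The level names below are hypothetical (the text only says there are "several states"), and the sketch assumes the 200 ms must be continuous, so the timer resets whenever any of the three conditions lapses.

```python
from dataclasses import dataclass
from enum import Enum

FULL_PERCEPTION_MS = 200.0  # dwell required for full perception, from the text

class Level(Enum):
    # Hypothetical perception levels for illustration only.
    NOT_PERCEIVED = 0
    PERIPHERAL = 1
    FULLY_PERCEIVED = 2

@dataclass
class DwellTracker:
    dwell_ms: float = 0.0  # continuous time the object has met all three conditions

    def update(self, dt_ms: float, in_peripheral: bool, in_foveal: bool,
               attended: bool, in_focus: bool) -> Level:
        """Advance one simulation cycle of length dt_ms and return the object's level."""
        if in_foveal and attended and in_focus:
            self.dwell_ms += dt_ms
        else:
            self.dwell_ms = 0.0  # assumption: the 200 ms must be uninterrupted
        if self.dwell_ms >= FULL_PERCEPTION_MS:
            return Level.FULLY_PERCEIVED
        if in_peripheral:
            return Level.PERIPHERAL
        return Level.NOT_PERCEIVED
```

With 50 ms cycles, an object that stays foveal, attended, and in focus reaches `FULLY_PERCEIVED` on the fourth cycle. This sketch reports only the current cycle's level; a world representation would normally retain what was already read.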

The perception agent also simulates commanded eye movements via defined scan, search, fixate, and track modes. Differing stimulus salience and pertinence are also accommodated through a model of pre-attention, in which specific attributes, e.g., color or flashing, are monitored to signal an attentional shift.
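A pre-attention monitor of the kind described can be sketched as change detection on a few watched attributes. Color and flashing come from the text; the `PreAttentionMonitor` class, the attribute dictionary format, and the rule that any change in a watched attribute cues a shift are assumptions made for illustration, and the sketch ignores pertinence weighting.

```python
from dataclasses import dataclass, field

# Attributes watched pre-attentively; color and flashing are the examples in the text.
MONITORED = ("color", "flashing")

@dataclass
class PreAttentionMonitor:
    last_seen: dict[str, dict] = field(default_factory=dict)

    def check(self, obj_id: str, attributes: dict) -> bool:
        """Record this cycle's watched attributes for obj_id and return True if any
        differs from the previous cycle (treated here as an attentional-shift cue)."""
        current = {k: attributes.get(k) for k in MONITORED}
        previous = self.last_seen.get(obj_id)
        self.last_seen[obj_id] = current
        return previous is not None and previous != current
```

A caution light turning from green to amber, or starting to flash, returns `True` on the cycle where the change occurs and `False` on later cycles while it stays the same.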

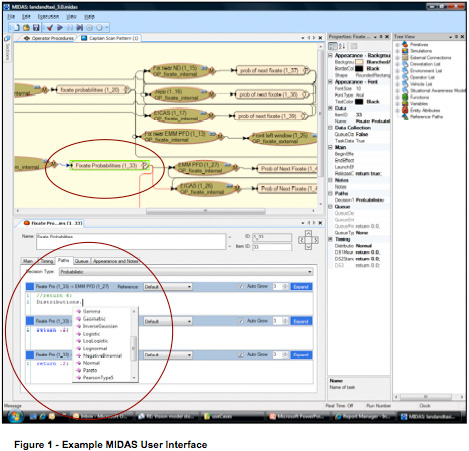

Micro Saint Sharp (Simulation Engine):

Micro Saint Sharp is a full-featured, discrete-event simulation modeling environment that simulates complex processes and solves difficult problems across a number of complex application domains. It allows MIDAS to operate according to its hybrid discrete-continuous modeling principle. Micro Saint Sharp supports rapid model development through flow charts and task networks, and it selects and sequences operator procedures based on information fed to it from the perception model inside MIDAS. Its code base is modular and flexible and can be used for a variety of applications; its plug-in interface and object-oriented model development allow easy integration with other external software applications. Micro Saint Sharp uses the Microsoft C# language, which permits more complex mathematical and logical expressions and algorithms in the model and a wider range of variable types (including integer, floating point, string, Boolean, and object, as well as local and global variables). A key benefit of Micro Saint Sharp is its transparency, provided by an effective visualization environment, together with its ability to process model components in a multi-threaded fashion.
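The text does not show Micro Saint Sharp's own (C#-based) API, so the following is only a generic Python sketch of the underlying idea: a discrete-event task network in which a time-ordered event queue starts tasks, and each completed task releases its successors. The `Task` class, `run_network`, and the example task names are hypothetical, and the sketch omits resources, branching conditions, and queues that a real engine would provide.

```python
import heapq
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Task:
    name: str
    duration: Callable[[], float]  # samples a task duration in seconds
    successors: list[str] = field(default_factory=list)

def run_network(tasks: dict[str, Task], start: str,
                t_end: float = float("inf")) -> list[tuple[str, float, float]]:
    """Simulate the network and return an activity trace of (task, start, end)."""
    queue = [(0.0, 0, start)]  # (event time, insertion order, task to begin)
    seq = 1                    # tie-breaker: equal-time events run in insertion order
    trace = []
    while queue:
        t, _, name = heapq.heappop(queue)
        if t > t_end:
            break
        task = tasks[name]
        finish = t + task.duration()
        trace.append((name, t, finish))
        # Completing a task releases all of its successors at the finish time.
        for nxt in task.successors:
            heapq.heappush(queue, (finish, seq, nxt))
            seq += 1
    return trace
```

With deterministic durations, a network `scan (0.5 s) -> detect (0.25 s) -> {reach (0.75 s), speak (1.5 s)}` yields the trace `[("scan", 0.0, 0.5), ("detect", 0.5, 0.75), ("reach", 0.75, 1.5), ("speak", 0.75, 2.25)]`. Passing stochastic samplers such as `lambda: random.lognormvariate(0.0, 0.25)` as durations turns each run into one Monte Carlo replication.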

The following example illustrates the interaction between MIDAS and Micro Saint Sharp. Given the vehicle's location relative to a feature in the environment, the visibility and lighting levels, and the scan pattern, the perception model informs Micro Saint Sharp when the operator's perception level of that feature changes. Likewise, scan patterns over the crewstation equipment, coupled with dwell time, affect perception level changes with respect to that equipment. Given a perception level change and the operator procedures, Micro Saint Sharp may initiate an operator task in the motor control model, such as reaching for an object, speaking, or changing the scan pattern. The accompanying figure also illustrates the visual scan model within MIDAS. This visual scan model currently uses a probabilistic scan pattern that selects from a series of response distributions to reflect human visual scanning performance.
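Both halves of this interaction can be sketched briefly: a probabilistic scan pattern that draws the next area of interest and a dwell time from distributions, and a procedure lookup that maps a perception level change to an operator task. Every area name, weight, dwell distribution, level string, and task label below is hypothetical; the text does not specify the distributions MIDAS actually uses.

```python
import random
from typing import Optional

# Hypothetical relative fixation weights per area of interest.
AOI_WEIGHTS = {"out_the_window": 0.5, "flight_display": 0.3, "nav_display": 0.2}
# Hypothetical dwell-time distributions per area of interest: (mean, sd) in ms.
DWELL_MS = {"out_the_window": (400.0, 120.0), "flight_display": (300.0, 80.0),
            "nav_display": (350.0, 100.0)}
MIN_DWELL_MS = 100.0  # hypothetical floor so a sampled dwell is never negative

# Hypothetical procedure table: (feature, new perception level) -> operator task.
PROCEDURES = {
    ("gear_handle", "FULL"): "reach:gear_handle",
    ("landing_zone", "FULL"): "speak:report_landing_zone",
    ("caution_light", "PERIPHERAL"): "change_scan:fixate_caution_light",
}

def next_fixation(rng: random.Random) -> tuple[str, float]:
    """Draw the next area of interest and how long to dwell on it."""
    names = list(AOI_WEIGHTS)
    aoi = rng.choices(names, weights=[AOI_WEIGHTS[n] for n in names], k=1)[0]
    mean, sd = DWELL_MS[aoi]
    return aoi, max(MIN_DWELL_MS, rng.gauss(mean, sd))

def on_perception_change(feature: str, new_level: str) -> Optional[str]:
    """Return the task to initiate for this perception change, or None."""
    return PROCEDURES.get((feature, new_level))
```

Passing a seeded `random.Random` keeps runs reproducible, which makes it easier to compare design variants under identical scan sequences.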


The other analysis path supported by MIDAS is dynamic simulation. The Simulation Mode provides facilities for running specifications of the human operator, cockpit equipment, and mission procedures in an integrated fashion. Their execution produces activity traces, task load timelines, information requirements, and mission performance measures, which can be analyzed as operator task characteristics, equipment, and mission context are manipulated.
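As an illustration of how a task load timeline can be derived from an activity trace, the sketch below samples the total load of all concurrently active tasks at fixed intervals. The trace format `(task, start, end, load)` and the load scale are assumptions; MIDAS's own workload metrics are not described here.

```python
from typing import Iterable

Activity = tuple[str, float, float, float]  # (task, start_s, end_s, load); hypothetical format

def task_load_timeline(trace: Iterable[Activity], step: float,
                       t_end: float) -> list[tuple[float, float]]:
    """Sample total concurrent load at t = 0, step, 2*step, ..., t_end.
    An activity counts as active on the half-open interval [start, end)."""
    trace = list(trace)
    n = int(t_end / step + 1e-9) + 1  # small epsilon guards against float round-down
    return [(i * step, sum(load for _, s, e, load in trace if s <= i * step < e))
            for i in range(n)]
```

For a trace of `scan` (0 to 2 s, load 3), `reach` (1 to 3 s, load 2), and `speak` (2.5 to 4 s, load 1), sampling every second gives loads 3, 5, 2, 1, 0, so the peak of 5 occurs at t = 1 s when scanning and reaching overlap.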
