Image Processing and Imaging Sensor Fusion (Mary K. Kaiser)

Overview

The Image Processing and Imaging Sensor Fusion research effort in the Vision Group addressed two requirements defined by the aeronautics operational community:

- to enable current and future aircraft to land independently of ground facilities and in spite of reduced visibility conditions

- to investigate the feasibility of a scene-acquisition system based on imaging sensors that might allow the safe, pilot-in-the-loop operation of a supersonic civil transport lacking conventional forward-looking cockpit windows.

The first requirement arose out of a civil-aviation desire to land at airports where low-visibility landing infrastructure was not provided. Only about 35 US civilian airports provided the expensive infrastructure required for a Category IIIA landing. Airlines' on-time performance and profitability would be greatly improved by a system that provided autonomous (aircraft-centered) landing guidance to their fleets, thus enabling, for example, Cat IIIA operations at Cat I/II/III airports.

The other customers for such a system were the US Air Force and the Joint Services Special Operations Command, which required the ability to land in adverse territory where no landing guidance would be provided and visibility might be impaired by battle activities (smoke, fire).

The other major thrust for the development of an imaging-sensor-based aircraft guidance system was the NASA High Speed Research Program, which was developing concepts and technologies for a future High Speed Civil Transport.

Aerodynamic considerations for such an aircraft required that it should have a considerably tapered nose and that it land at very high angles of attack. These requirements combined to set the position of the cockpit back from the tip of the aircraft and hence rendered any cockpit windows useless for forward vision in the final phases of landing.

The Vision Group developed candidate technical solutions to this problem through several programs. These programs connected its researchers with the airframers and the customers of an eventual system, so that its requirements could be properly defined, and with the manufacturers of candidate imaging sensors, so that state-of-the-art technologies could be incorporated into the system being studied.

The Vision Group's contribution to the development of solutions to this challenge was concentrated on the following efforts:

- to provide a flexible, rapid-prototyping system for evaluating the suitability of imagery from candidate sensors, as well as the suitability of candidate processing and fusion algorithms for this imagery. This system was to take the form of an integrated collection of software tools allowing a researcher to investigate the properties of an end-to-end imaging system, from input (e.g. manufacturer-provided sensor data, built-in sensor models) to processing and fusion (e.g. noise reduction and shaping algorithms, multiscale and adaptive fusion techniques) to pilot display of the resulting image stream

- to develop processing and fusion algorithms and evaluate their effectiveness in constructing image streams matched to the performance characteristics of the pilot's visual system

- to evaluate candidate end-to-end processing systems by applying computational models of the human visual system to the output image stream; these computational models of the visual detectability and discriminability of objects (e.g. runway incursions) are based on currently available knowledge and ongoing research into human visual system performance parameters
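As a rough illustration of the multiscale fusion mentioned in the first item above, the following Python sketch splits two co-registered sensor images into a low-pass base band and a detail band, averages the base bands, and keeps the stronger detail at each pixel. It is a minimal sketch under stated assumptions, not the Vision Group's software: the function names `box_blur` and `fuse_two_band`, the box filter, and the selection rule are all hypothetical choices made for illustration.

```python
def box_blur(img, radius=1):
    """Low-pass filter: mean over a (2r+1) x (2r+1) window, clipped at the edges."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                    total += img[yy][xx]
                    count += 1
            out[y][x] = total / count
    return out


def fuse_two_band(a, b, radius=1):
    """Fuse two registered, same-size images (assumed, not checked).

    Base band: average of the two low-pass images.
    Detail band: at each pixel, keep the detail with the larger magnitude.
    """
    base_a, base_b = box_blur(a, radius), box_blur(b, radius)
    h, w = len(a), len(a[0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            detail_a = a[y][x] - base_a[y][x]
            detail_b = b[y][x] - base_b[y][x]
            detail = detail_a if abs(detail_a) >= abs(detail_b) else detail_b
            fused[y][x] = 0.5 * (base_a[y][x] + base_b[y][x]) + detail
    return fused
```

Fusing an image with itself returns that image (up to rounding), which is a quick sanity check on the decomposition. A practical system would likely use more than two bands and a selection rule informed by a model of the pilot's visual system.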

The Vision Group was particularly well suited to this effort since it consisted of a mix of scientists and engineers with backgrounds ranging from basic vision research to image processing and computational techniques.

Figure 1. An image compressed by a factor of 100 using DCTune algorithms. The original image is 512x768, and it was compressed for a display visual resolution of 64 pixels/deg.

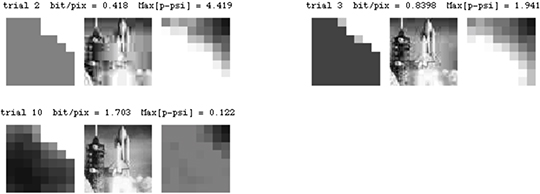

Figure 2. The figure shows several steps in the progressive optimization of an image. Each row shows: the quantization matrix (left), the compressed image (middle), and the matrix of perceptual errors (right). The optimization seeks a uniform perceptual error matrix.
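The idea of adjusting a quantization step until the perceptual error reaches a chosen level can be sketched for a single DCT frequency as follows. This is a simplified illustration, not the DCTune implementation: the names `quantize`, `perceptual_error`, and `tune_step`, the use of the maximum error across blocks, and the bisection search are all assumptions, and perceptual error is taken here to be the quantization error divided by an assumed visibility threshold.

```python
def quantize(c, q):
    """Uniform quantization of one DCT coefficient with step size q."""
    return q * round(c / q)


def perceptual_error(coeffs, q, threshold):
    """Largest quantization error across blocks, in units of the threshold."""
    return max(abs(c - quantize(c, q)) for c in coeffs) / threshold


def tune_step(coeffs, threshold, target, iters=40):
    """Bisection for a step size whose measured error stays at or below target.

    The search starts from q = 2 * threshold * target, where the worst-case
    quantization error q / 2 equals target * threshold (in exact arithmetic),
    and tries to enlarge q from there.
    """
    lo = 2.0 * threshold * target
    hi = 2.0 * max(abs(c) for c in coeffs) + lo  # every coefficient rounds to 0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if perceptual_error(coeffs, mid, threshold) <= target:
            lo = mid   # still acceptable: try a coarser step
        else:
            hi = mid   # too visible: back off
    return lo


# Hypothetical coefficients of one frequency across three blocks.
step = tune_step([10.0, -7.0, 3.0], threshold=2.0, target=1.0)
```

Repeating this for every frequency with the same target yields a quantization matrix whose per-frequency errors are pushed toward a common level. Because the measured error is not monotonic in the step size, bisection finds an acceptable step rather than necessarily the largest one.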

Contact

Albert J. Ahumada, Jr.

(650) 604-6257

Albert.J.Ahumada@nasa.gov