MIDAS 1.0

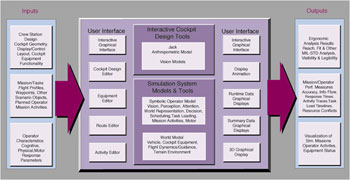

The existing system contains a set of integrated software modules, editors, and analysis tools produced in C, C++, and Lisp, with an architecture based on agent-actors theory. Each major component, or agent, contains a common message-passing interface, a body unique to that agent's purpose, and a common biographer structure that keeps track of important state data or events for analysis. This uniform representation was chosen to provide modularity. The total system contains 350,000 executable lines of code, with about half of the total associated with a dynamic anthropometry model.
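The three-part agent structure described above (common message-passing interface, agent-specific body, common biographer) can be illustrated with a minimal sketch. This is not MIDAS code, which was written in C, C++, and Lisp; the class names `Message`, `Biographer`, `Agent`, and `EchoAgent`, and the tick-based `step` method, are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Message:
    sender: str
    topic: str
    payload: Any = None


@dataclass
class Biographer:
    """Common record of state data and events, kept for later analysis."""
    events: list = field(default_factory=list)

    def record(self, tick: int, event: str, data: Any = None) -> None:
        self.events.append((tick, event, data))


class Agent:
    """Shared shell: message interface and biographer around a unique body."""

    def __init__(self, name: str):
        self.name = name
        self.inbox = []
        self.biographer = Biographer()

    def send(self, other: "Agent", topic: str, payload: Any = None) -> None:
        other.inbox.append(Message(self.name, topic, payload))

    def step(self, tick: int) -> None:
        # Drain the inbox, log each message, then hand it to the body.
        while self.inbox:
            msg = self.inbox.pop(0)
            self.biographer.record(tick, f"received:{msg.topic}", msg.payload)
            self.body(tick, msg)

    def body(self, tick: int, msg: Message) -> None:
        raise NotImplementedError  # each agent supplies its own body


class EchoAgent(Agent):
    """Hypothetical agent whose body only remembers the last payload."""
    last_payload = None

    def body(self, tick: int, msg: Message) -> None:
        self.last_payload = msg.payload
```

Because every agent shares the same `send`/`step` shell and the same biographer, a new agent only has to define `body`, which is one way to read the modularity claim in the text.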

Once a user inputs or specifies operator, task, and equipment characteristics, MIDAS operates in two major modes. The first, Interactive Mode, supports scenario-independent layout of the crew station for assessments of visibility and legibility, examination of anthropometric characteristics, and analyses of cockpit topology and configuration. The output of MIDAS in this mode corresponds to cockpit geometry and external vision design guides, such as MIL-STD-1472 and AS-580B.

User View of MIDAS

The other analysis path supported by MIDAS is a dynamic simulation. The Simulation Mode provides facilities whereby specifications of the human operator, cockpit equipment, and mission procedures are run in an integrated fashion. Their execution results in activity traces, task load timelines, information requirements, and mission performance measures, which can be analyzed in light of manipulations of operator task characteristics, equipment, and mission context.

MIDAS contains representations of human cognitive, perceptual, and motor operations in order to simulate control and supervisory behavior. These models describe (within their limits of accuracy) the responses that can be expected of human operators interacting with dynamic automated systems. The fundamental human performance elements of these representations can be applied to any human-machine environment. Tailoring for the particular requirements of a given domain, largely in terms of the human operator's knowledge and rule base, is, of course, a necessary step as the model is moved among domains.

Each of the human operators modeled by MIDAS contains the following models and structures, the interaction of which produces a stream of activities in response to mission requirements, equipment requirements, and models of human performance capabilities and limits.

Physical Representations: An anthropometric model of human figure dimensions and dynamics has been developed in conjunction with the Graphics Laboratory of the University of Pennsylvania. The model used is called Jack, and is an agent in the overall MIDAS system. The Jack agent's purpose is to represent human figure data (e.g., size and joint limits) in the form of a mannequin which dynamically moves through various postures to represent the physical activities of a simulated human operator. The graphic representation of the Jack agent also assists designers with questions of cockpit geometry, reach accommodation, restraint, egress, and occlusion.

Perception and Attention: The simulated human operator is situated in an environment where data constantly streams into the operator's physical sensors. While auditory, haptic, and proprioceptive systems serve an important role in the perception of information relevant to the operator of vehicles, within MIDAS the present focus has been on modeling visual perception. In brief, during each simulation cycle, the perception agent computes which environment or cockpit objects are imaged on the operator's retina, tagging them as in/out of the peripheral and foveal fields of view (90 and 5 degrees, respectively), in/out of the attention field of view (variable depending on the task), and in/out of focus, relative to the fixation plane. An environmental object can be in one of several states of perceptual attention. Objects in peripheral visual fields are perceived, and attentionally salient changes in their state are passed to the updatable world representation. In order for detailed information to be fully perceived, e.g., reading of textual messages, the data of interest must be in focus, attended, and within the foveal field of view for 200 ms. The perception agent also controls the simulation of commanded eye movements via defined scan, search, fixate, and track modes. Differing stimulus salience and pertinence are also accommodated through a model of pre-attention in which specific attributes, e.g., color or flashing, are monitored to signal an attentional shift.
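The per-cycle tagging and the 200 ms condition described above can be sketched as follows. This is an illustrative reading, not the MIDAS perception agent: the 90 and 5 degree figures come from the text, but treating them as full cone angles centered on the gaze direction is an assumption, as is resetting the dwell timer whenever any of the three conditions lapses. The function names are hypothetical.

```python
import math

PERIPHERAL_FOV_DEG = 90.0  # from the text; assumed to be a full cone angle
FOVEAL_FOV_DEG = 5.0       # from the text; assumed to be a full cone angle
DWELL_MS = 200.0           # from the text


def eccentricity_deg(gaze, to_object):
    """Angle in degrees between the gaze direction and the direction to an object."""
    dot = sum(g * o for g, o in zip(gaze, to_object))
    norm = math.hypot(*gaze) * math.hypot(*to_object)
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))


def tag_object(gaze, to_object):
    """Tag an object as in/out of the peripheral and foveal fields of view."""
    ecc = eccentricity_deg(gaze, to_object)
    return {
        "in_peripheral": ecc <= PERIPHERAL_FOV_DEG / 2,
        "in_foveal": ecc <= FOVEAL_FOV_DEG / 2,
    }


def update_dwell(dwell_ms, tags, attended, in_focus, dt_ms):
    """Accumulate dwell while foveal, attended, and in focus; otherwise reset (assumption)."""
    if tags["in_foveal"] and attended and in_focus:
        return dwell_ms + dt_ms
    return 0.0


def fully_perceived(dwell_ms):
    """Detailed information counts as perceived once the dwell reaches 200 ms."""
    return dwell_ms >= DWELL_MS
```

With a hypothetical 50 ms simulation cycle, an object held on the gaze axis, attended, and in focus would become fully perceived on the fourth cycle; an object 26 degrees off axis would be tagged peripheral only and never accumulate dwell.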

Updatable World Representation (UWR): In MIDAS, the UWR provides a structure whereby each of the multiple, independent human agents, representing individuals and cooperating teams of pilots and flight crews, accesses its own tailored or personalized information about the operational world. The contents of a UWR are determined, first, by pre-simulation loading of required mission, procedural, and equipment information. Data are then updated in each operator's UWR as a function of the perceptual mechanisms previously described. The data in each operator's UWR are operated on by daemons and rules to guide behavior, and they are the sole basis for a given operator's activity. Providing each operator with his or her own UWR accounts for the significant operational reality that different members of a cooperating control team have different information about the world in which they operate. Further, the individual operator may or may not receive a piece of information available to the sensory apparatus, depending on perceptual focus at the relevant point in the mission. It is of some significance that, while ideally the human operators' representation of the world would be consonant with the state of the world, in fact this is rarely the case. The capability for both systematic and random deviation from the ground truth of the simulation world is a critically necessary component of any system that intends to represent and analyze non-trivial human performance.
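The separation between ground truth and each operator's private UWR can be made concrete with a small sketch. Everything here is hypothetical (the `UWR` class, the `broadcast` helper, the `altitude_ft` key, and the example rule); it only illustrates the idea that rules act on an operator's beliefs, never on the simulation world directly.

```python
class UWR:
    """Hypothetical per-operator world representation: a private store of beliefs."""

    def __init__(self, preloaded):
        # Pre-simulation loading of mission, procedural, and equipment information.
        self.beliefs = dict(preloaded)

    def perceive(self, key, value):
        self.beliefs[key] = value


def broadcast(world, key, value, operators, perceivers):
    """Change ground truth; only operators who perceive the change update their UWR."""
    world[key] = value
    for name, uwr in operators.items():
        if name in perceivers:
            uwr.perceive(key, value)


def low_altitude_rule(uwr, floor_ft=4500):
    """Example rule: it reads the operator's beliefs only, not the world."""
    return "climb" if uwr.beliefs["altitude_ft"] < floor_ft else None
```

If the altitude drops and only the pilot perceives it, the pilot's rule fires while the copilot's does not, even though both share the same ground truth. That divergence is the behavior the paragraph above describes.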

The organization of perceptual data and knowledge about the world in a UWR is accomplished through a semantic net, a linked structure of object nodes that represent concepts. The relationship among these nodes is expressed as a "strength" or relatedness; the strength of such relationships has been investigated to guide models of memory dynamics, i.e., interference, decay, and rehearsal.
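A semantic net with link strengths that decay and are restored by rehearsal might look like the sketch below. The text does not give the functional form of the memory dynamics, so the exponential half-life decay, the `boost` parameter, and the class and method names are all assumptions made for illustration; interference is not modeled here.

```python
class SemanticNet:
    """Hypothetical semantic net: concept nodes joined by links with a strength in [0, 1]."""

    def __init__(self, half_life_s=30.0):
        self.strength = {}              # (a, b) with a <= b  ->  link strength
        self.half_life_s = half_life_s  # assumed decay parameter

    @staticmethod
    def _key(a, b):
        return tuple(sorted((a, b)))

    def link(self, a, b, strength):
        self.strength[self._key(a, b)] = strength

    def decay(self, dt_s):
        """Weaken every link exponentially with elapsed time (assumed form)."""
        factor = 0.5 ** (dt_s / self.half_life_s)
        for key in self.strength:
            self.strength[key] *= factor

    def rehearse(self, a, b, boost=0.5):
        """Move a link's strength part of the way back toward 1.0."""
        key = self._key(a, b)
        s = self.strength[key]
        self.strength[key] = s + boost * (1.0 - s)

    def related(self, concept):
        """Neighbours of a concept, strongest link first."""
        pairs = [
            (b if a == concept else a, s)
            for (a, b), s in self.strength.items()
            if concept in (a, b)
        ]
        return sorted(pairs, key=lambda p: p[1], reverse=True)
```

In this sketch a weak link that is rehearsed can overtake a stronger link that has only decayed, which is the kind of ordering change a memory model built on relatedness strengths would need to express.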
